Adding Color

I settled on using more computer vision to solve the depth problem. Thresholding on depth alone means that no other part of your body can be inside the depth window, which is hard to guarantee if you want a large window. The gesture recognition program that I am using was built to detect small hand movements right in front of the Kinect, but I have to track across the Kinect's entire field of view, as well as over a much larger depth range.

To stop tracking the rest of your hand and body, I added a filter that masks off any pixels in the depth image that are not black in the color image. The filter code was generated by GRIP for FRC, and I added it to the original hand-tracking Python program.

How it works

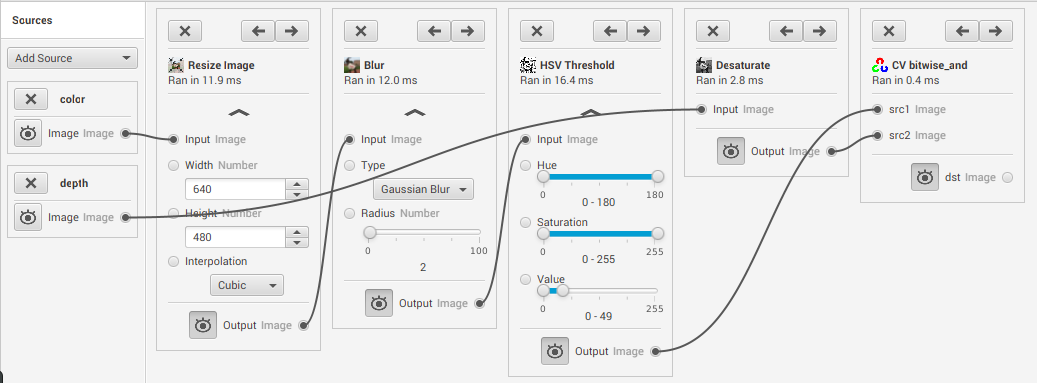

This is the pipeline.

Starting on the left we have the sources. Since GRIP doesn’t support the Kinect, I am using screenshots that I took earlier. Color is the color image, and depth is the depth image.

The first step is to resize the color image to be the same size as the depth image. This way every pixel in the color image corresponds to a pixel in the depth image.

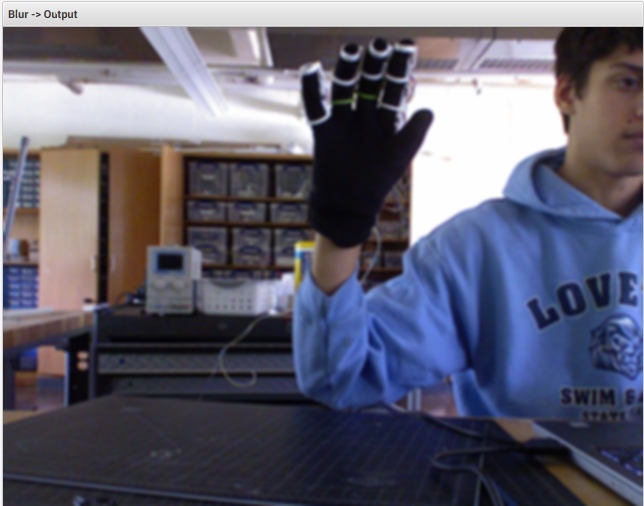

The second step blurs the input photo to reduce noise. This gives a cleaner edge and gets rid of holes for the mask as well.

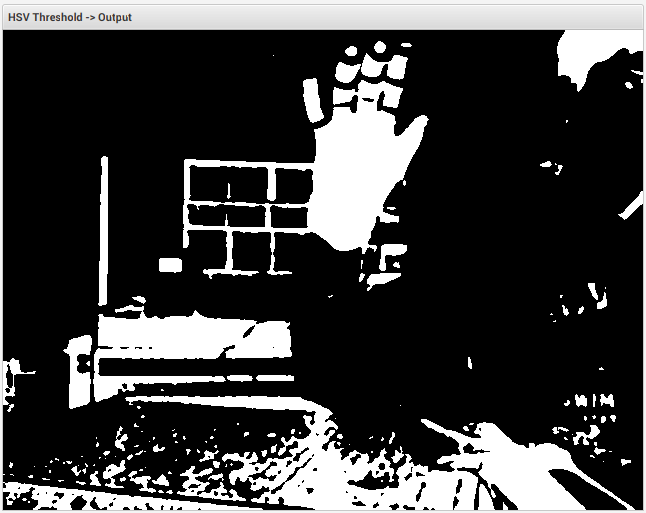

The HSV threshold gets rid of any pixels that are not inside the range of H, S, and V values. All pixels that are left are white. The value is set low because I only want black pixels, and the H and S sliders are set to all values because you can’t really tell what the hue of black is, so I allow all.
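To make the "hue of black" point concrete, here is a minimal sketch using only Python's standard `colorsys` module rather than OpenCV. The function name `is_black`, the sample pixels, and the cutoff of 50 on a 0 to 255 value scale are assumptions chosen for illustration.

```python
import colorsys

def is_black(rgb, v_max=50):
    """Return True if an 8-bit RGB pixel passes a value-only HSV threshold."""
    r, g, b = (c / 255.0 for c in rgb)
    _h, _s, v = colorsys.rgb_to_hsv(r, g, b)
    # Hue and saturation are ignored on purpose: only brightness decides.
    return v * 255 <= v_max

# Hypothetical sample pixels (8-bit RGB).
pixels = {
    "black glove": (10, 10, 10),
    "dark red": (40, 0, 0),
    "skin tone": (224, 172, 105),
    "white plastic": (240, 240, 240),
}
mask = {name: is_black(rgb) for name, rgb in pixels.items()}

# Two pixels that both look black can have very different hues,
# which is why the hue range is left wide open.
hue_reddish = colorsys.rgb_to_hsv(10 / 255.0, 0.0, 0.0)[0]
hue_bluish = colorsys.rgb_to_hsv(0.0, 0.0, 10 / 255.0)[0]
```

Both near-black pixels pass the threshold even though their hues sit on opposite sides of the color wheel, while the skin tone and white plastic are rejected on value alone.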

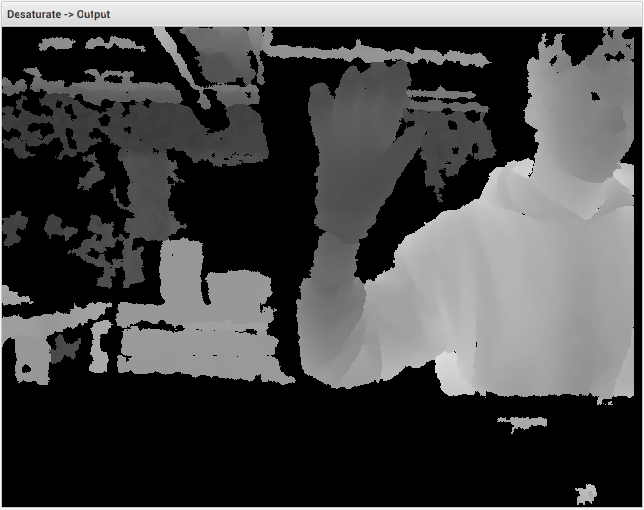

This isn’t really a step, but this is a desaturated color representation of the depth data. It is a black and white version of a color image I posted previously. The values of this image do not correspond to the actual depth; it is only here as an example.

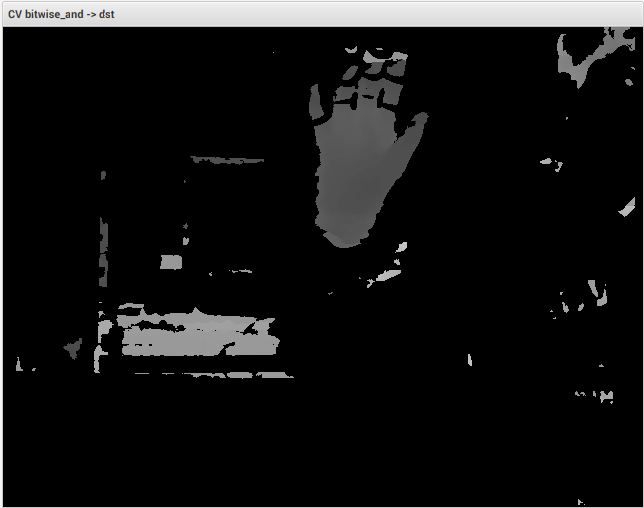

This is what is left after the mask has been applied. If you compare the last two pictures, only the pixels that were white in the thresholded image are left in the new depth image. This is the image that will be sent to the existing detection program. One thing that could be problematic with this method is that the white plastic pieces split up the outline of the hand. Of course I could just print these out in black plastic, or color them black with a sharpie, but the palm should be big enough and the fingers small enough that the detection will figure everything out.
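As a toy illustration of the masking step, here is a minimal sketch using NumPy on 3x3 arrays. The array names and values are made up for the example, and it assumes that the depth image has already been thresholded to 0 or 255.

```python
import numpy as np

# Hypothetical thresholded depth image: 255 where something is in the depth window.
depth_thresh = np.array([[255, 255, 0],
                         [255, 255, 0],
                         [  0,   0, 0]], dtype=np.uint8)

# Hypothetical color mask: 255 where the color image was black.
black_mask = np.array([[0, 255, 255],
                       [0, 255, 255],
                       [0,   0,   0]], dtype=np.uint8)

# A pixel survives only if it is set in BOTH images.
depth_masked = np.bitwise_and(black_mask, depth_thresh)
print(depth_masked)
```

Only the middle column of the top two rows survives, because those are the only pixels that are both inside the depth window and black in the color image.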

The pipeline generated from the program turns into just these few lines of code:

(color,_) = get_video() # Get color frame

colorSized = cv2.resize(color, (640, 480), interpolation=cv2.INTER_CUBIC) # Resizes the color image to the size of the depth image

colorBlur = cv2.GaussianBlur(colorSized, (ksize, ksize), round(radius)) # Blurs the image to reduce noise

colorFiltered = cv2.inRange(cv2.cvtColor(colorBlur, cv2.COLOR_BGR2HSV), (0, 0, 0), (180, 255, 50)) # Runs an HSV filter to get only black pixels (all H and S, low V)

depthMasked = cv2.bitwise_and(colorFiltered, depthThresh) # ANDs the two images so only the pixels that are black in the color image will stay

In this new video, you can see that even though my arm and hand are all in the same depth range (it all looks red), only the palm gets tracked. This is because the plastic pieces split the outline into pieces too small to track. It seems to work okay, but if you hold the glove so that the Kinect can only see the plastic parts, it loses tracking.